Naive Bayes Classifier: Which Cases It Works Best

Typical applications include spam filtering, document classification, and sentiment prediction.


Applications of Naive Bayes Algorithms.

Naive Bayes assumes that all predictors (or features) are independent of one another. Naive Bayes has served as a baseline for spam filtering since the 1990s. It tends to be fast when applied to big data.

Despite this logical flaw, Naive Bayes classifiers do surprisingly well in situations where the terms are clearly not conditionally independent. Step 2: Dataset Summarization. Naive Bayes is suitable for solving multi-class prediction problems.
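The text does not spell out what dataset summarization involves. A minimal sketch, assuming it means computing the mean, standard deviation, and count of each feature column (the function names and the sample rows are hypothetical, and the last column is assumed to hold the class label):

```python
from math import sqrt


def mean(values):
    """Arithmetic mean of a sequence of numbers."""
    return sum(values) / len(values)


def stdev(values):
    """Sample standard deviation (divides by n - 1)."""
    avg = mean(values)
    variance = sum((v - avg) ** 2 for v in values) / (len(values) - 1)
    return sqrt(variance)


def summarize_dataset(rows):
    """Return (mean, stdev, count) per feature column.

    Assumption: the last entry of every row is the class label,
    so that column is skipped.
    """
    feature_columns = list(zip(*rows))[:-1]
    return [(mean(col), stdev(col), len(col)) for col in feature_columns]


# Hypothetical toy data: two numeric features followed by a label.
rows = [
    [1.0, 20.0, 0],
    [2.0, 21.0, 0],
    [3.0, 22.0, 1],
]
summary = summarize_dataset(rows)
print(summary)
```

These per-column statistics are the inputs a Gaussian likelihood would need later on.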

Healthcare professionals can use Naive Bayes to indicate whether a patient is at high risk for certain diseases and conditions, such as heart disease, cancer, and other ailments. In the multinomial event model, feature vectors represent the frequencies with which certain events have been generated by a multinomial distribution. It works best when the data is categorical, but it can work with all types of data.

Building a Naive Bayes Classifier in Python. The Naïve Bayes classifier is one of the simplest and most effective classification algorithms; it helps build fast machine learning models that can make quick predictions. Naive Bayes classifiers are among the simplest Bayesian network models, but coupled with kernel density estimation they can achieve high accuracy levels.

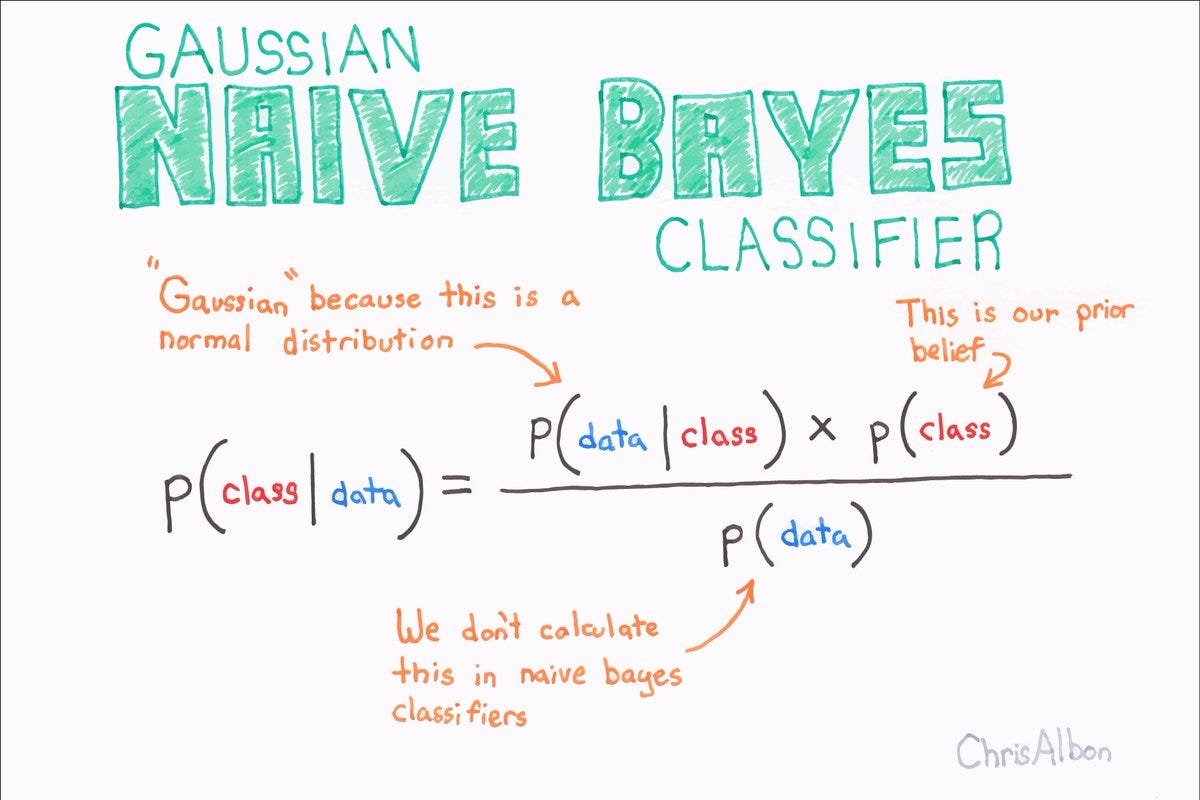

Gaussian Probability Density Function. Naive Bayes is based on the work of Rev. Thomas Bayes. It assumes that the presence of one feature in a class is independent of any other feature present in the same class.
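The Gaussian probability density function named above can be written in a few lines. This is a sketch of the standard normal density formula; the function and parameter names are chosen here for illustration:

```python
from math import exp, pi, sqrt


def gaussian_pdf(x, mean, stdev):
    """Density of a normal distribution with the given mean and stdev at x."""
    exponent = exp(-((x - mean) ** 2) / (2 * stdev ** 2))
    return exponent / (sqrt(2 * pi) * stdev)


# The density peaks at the mean and is symmetric around it.
print(gaussian_pdf(1.0, 1.0, 1.0))
print(gaussian_pdf(0.0, 1.0, 1.0), gaussian_pdf(2.0, 1.0, 1.0))
```

In a Gaussian Naive Bayes model, this value would stand in for the likelihood of a continuous feature value given a class, using that class's mean and standard deviation.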

Record the distinct categories represented in the observations of the entire predictor. News classification: with the help of a Naive Bayes classifier, Google News recognizes whether a news item is political news, world news, and so on. Which Naive Bayes to use when?

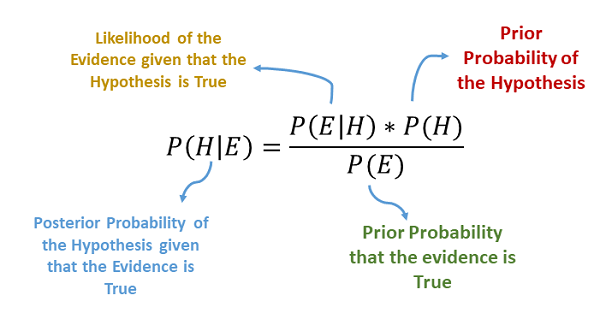

In the multivariate Bernoulli event model, features are independent binary variables. Naive Bayes is a family of probabilistic algorithms that take advantage of probability theory and Bayes' theorem to predict the tag of a text, such as a piece of news or a customer review. Types of Naive Bayes Classifiers.
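For the multivariate Bernoulli event model mentioned above, each feature is treated as present or absent. A minimal sketch of the per-class log-likelihood under that model follows; the function name and the probabilities are made up for illustration:

```python
from math import log


def bernoulli_log_likelihood(features, feature_probs):
    """Log-likelihood of binary features under independent Bernoulli variables.

    features: sequence of 0/1 flags (feature absent / present).
    feature_probs: P(feature present | class) for each feature; each value
    must lie strictly between 0 and 1 so that both logs are defined.
    """
    total = 0.0
    for present, p in zip(features, feature_probs):
        total += log(p) if present else log(1.0 - p)
    return total


# Hypothetical class model: the first word appears in 80% of the class's
# documents, the second in 10%.
print(bernoulli_log_likelihood([1, 0], [0.8, 0.1]))
```

Note that an absent feature also contributes to the score, which is what distinguishes this model from one based on word counts.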

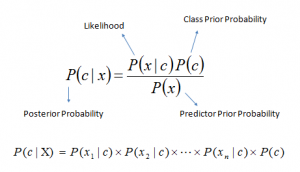

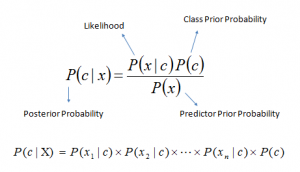

The name comes from Rev. Thomas Bayes (1702). If speed is important, choose Naive Bayes over K-NN. The Naive Bayes classifier calculates the probability of a class given a set of feature values.

In this article I explain how Naive Bayes classification works and present an example coded with the C language. The multinomial model is the event model typically used for document classification. If the assumption that features are independent holds, Naive Bayes can perform better than other models and requires much less training data.

The Naive Bayes classifier is especially known to perform well on text classification problems. It is useful for making predictions and forecasts based on historical results. Gaussian Naive Bayes.

There are plenty of standalone tools available that can perform Naive Bayes classification. Naive Bayes is a classification algorithm suitable for binary and multiclass classification. Step 1: Class Separation.
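Class separation can be sketched as grouping the training rows by their label. The snippet assumes the label is the last entry of each row; `separate_by_class` and the sample rows are hypothetical names and data:

```python
from collections import defaultdict


def separate_by_class(rows):
    """Group feature vectors by class label (assumed to be the last entry)."""
    separated = defaultdict(list)
    for row in rows:
        *features, label = row
        separated[label].append(features)
    return dict(separated)


rows = [[3.4, 2.3, 0], [1.5, 2.4, 1], [3.1, 3.0, 0]]
groups = separate_by_class(rows)
print(groups)
```

Once the rows are grouped, statistics can be computed for each class independently.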

Naïve Bayes performs better with categorical input variables than with numerical variables. Naive Bayes classifiers are highly scalable probabilistic classifiers built upon Bayes' theorem. Where Bayes Excels.

Naive Bayes classifiers are mostly used in text classification, because they give better results in multi-class problems where features are independent and have a higher success rate than other algorithms. This article goes through Bayes' theorem, makes some assumptions, and then implements a naive Bayes classifier from scratch. It is mainly used in text classification, which involves high-dimensional training datasets.

It is a probabilistic classifier, which means it predicts on the basis of the probability of an object. Scikit-learn offers several types of Naive Bayes classifier, including Gaussian, Multinomial, and Bernoulli. Naive Bayes is better suited for categorical input variables than numerical variables.

Some widely adopted use cases include spam e-mail filtering and fraud detection. It works better with large data sets. The construction of a Naive Bayes classifier using a multivariate multinomial predictor is described below.

The "Naive" in Naive Bayes classification comes from the drastic, and usually false, assumption that the effects do not affect each other. Naive Bayes classification is a machine-learning technique that can be used to predict which category a particular data case belongs to. For example, a fruit may be considered a watermelon if it is green, round, and about 15 cm in diameter.

Execution of Naive Bayes Classifier Tutorial for Python. Naive Bayes classifier belongs to a family of probabilistic classifiers that are built upon the Bayes theorem.

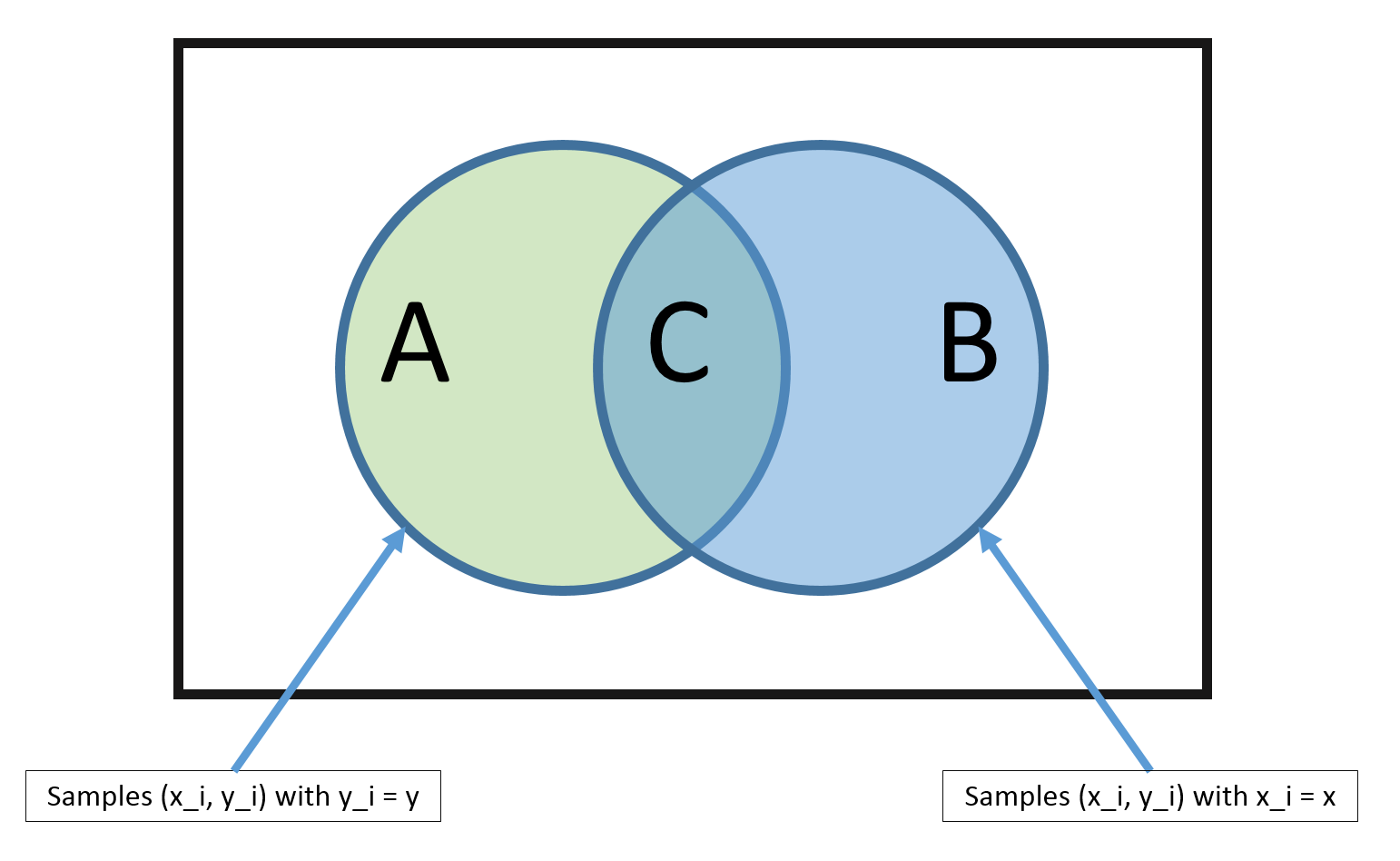

In text learning, we use the count of each word to predict the class or label. Naive Bayes is a supervised learning algorithm for classification, so the task is to find the class of an observation (data point) given the values of its features. Some of the most popular uses for them are weather.
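To make the word-count idea concrete, here is a small from-scratch sketch of a multinomial-style text classifier. Several details are assumptions not stated in the text: add-one (Laplace) smoothing via `alpha`, whitespace tokenization, skipping words never seen in training, and the toy documents themselves.

```python
from collections import Counter, defaultdict
from math import log


def train_multinomial_nb(documents, labels, alpha=1.0):
    """Count documents per class and word occurrences per class."""
    class_counts = Counter(labels)
    word_counts = defaultdict(Counter)
    vocabulary = set()
    for text, label in zip(documents, labels):
        words = text.lower().split()
        word_counts[label].update(words)
        vocabulary.update(words)
    return class_counts, word_counts, vocabulary, alpha


def predict(model, text):
    """Return the class with the highest log-posterior score."""
    class_counts, word_counts, vocabulary, alpha = model
    total_docs = sum(class_counts.values())
    scores = {}
    for label, doc_count in class_counts.items():
        score = log(doc_count / total_docs)  # log prior
        total_words = sum(word_counts[label].values())
        for word in text.lower().split():
            if word not in vocabulary:
                continue  # assumption: ignore words unseen in training
            count = word_counts[label][word]
            # Smoothed estimate of P(word | class).
            score += log((count + alpha) / (total_words + alpha * len(vocabulary)))
        scores[label] = score
    return max(scores, key=scores.get)


# Hypothetical toy corpus.
docs = ["win money now", "cheap money offer",
        "meeting agenda today", "project meeting notes"]
labels = ["spam", "spam", "ham", "ham"]
model = train_multinomial_nb(docs, labels)
print(predict(model, "cheap money"))
print(predict(model, "meeting notes"))
```

Working in log space avoids numeric underflow when many small probabilities are multiplied together.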

Where to Use Naive Bayes: These classifiers are used behind the scenes of applications you access every day. In statistics, naive Bayes classifiers are a family of simple probabilistic classifiers based on applying Bayes' theorem with strong (naive) independence assumptions between the features (see Bayes classifier). But why is it called Naive?

In comparison, K-NN is usually slower for large amounts of data because of the calculations required for each new step in the process. It's time to see how the Naive Bayes classifier uses this theorem. The Naive Bayes algorithm is called "Naive" because it assumes that the occurrence of a certain feature is independent of the occurrence of other features.

The class probability is written $P(y_i \mid x_1, x_2, \dots, x_n)$, the probability of class $y_i$ given the feature values $x_1, x_2, \dots, x_n$. Naive Bayes classifiers are a family of simple probabilistic classifiers based on applying Bayes' theorem with strong (naive) independence assumptions between the features or variables. Naive Bayes is a probabilistic machine learning algorithm that can be used in a wide variety of classification tasks.
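Written out, the class probability follows from Bayes' theorem combined with the independence assumption. The notation is assumed here: $y_i$ is a candidate class, $x_1, \dots, x_n$ are the observed feature values, and $\hat{y}$ is the predicted class.

```latex
P(y_i \mid x_1, \dots, x_n)
  = \frac{P(y_i)\, P(x_1, \dots, x_n \mid y_i)}{P(x_1, \dots, x_n)}
  \propto P(y_i) \prod_{j=1}^{n} P(x_j \mid y_i),
\qquad
\hat{y} = \arg\max_{y_i} \; P(y_i) \prod_{j=1}^{n} P(x_j \mid y_i)
```

The denominator is the same for every class, so it can be dropped when only the most probable class is needed.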

The Denominator of Multi-effect Bayes. To illustrate the steps, consider an example where observations are labeled 0, 1, or 2, and the predictor is the weather when the sample was conducted. Naive Bayes has been successfully used for many purposes, but it works particularly well with natural language processing (NLP) problems.
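The weather example can be sketched as follows: record the distinct categories of the predictor, then estimate the probability of each category within each class. The observations, the function name, and the additive smoothing constant `alpha` are all assumptions made for illustration.

```python
from collections import Counter, defaultdict


def fit_categorical(values, labels, alpha=1.0):
    """Estimate P(category | class) for a single categorical predictor."""
    categories = sorted(set(values))  # distinct categories across all observations
    counts = defaultdict(Counter)
    for value, label in zip(values, labels):
        counts[label][value] += 1
    probs = {}
    for label, counter in counts.items():
        total = sum(counter.values())
        probs[label] = {
            c: (counter[c] + alpha) / (total + alpha * len(categories))
            for c in categories
        }
    return categories, probs


# Hypothetical observations labeled 0, 1, or 2, with the weather as predictor.
weather = ["sunny", "rainy", "sunny", "cloudy", "rainy", "sunny"]
labels = [0, 1, 0, 2, 1, 2]
categories, probs = fit_categorical(weather, labels)
print(categories)
print(probs[0])
```

Smoothing keeps a category that never occurred with a class from receiving a probability of exactly zero, which would otherwise wipe out the whole product.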

Because it assumes a normal distribution, Gaussian Naive Bayes is used when all the features are continuous. Step 3: Data Summary by Class. Multinomial NB: all types of data, such as categorical, numerical, and text.
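One way to read the per-class data summary is to compute the mean, standard deviation, and count of every feature separately for each class, which is what a Gaussian model needs. A sketch under that assumption, with the label taken to be the last entry of each row and hypothetical sample data:

```python
from collections import defaultdict
from statistics import mean, stdev


def summarize_by_class(rows):
    """Return {label: [(mean, stdev, count), ...]} with one tuple per feature."""
    by_class = defaultdict(list)
    for *features, label in rows:
        by_class[label].append(features)
    summaries = {}
    for label, feature_rows in by_class.items():
        # zip(*feature_rows) turns rows into columns, one per feature.
        summaries[label] = [
            (mean(col), stdev(col), len(col)) for col in zip(*feature_rows)
        ]
    return summaries


# Each class needs at least two rows, because stdev is undefined for one value.
rows = [
    [1.0, 10.0, 0],
    [3.0, 14.0, 0],
    [6.0, 20.0, 1],
    [8.0, 24.0, 1],
]
class_summaries = summarize_by_class(rows)
print(class_summaries)
```

The resulting means and standard deviations per class can then be plugged into a Gaussian density to score new observations.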

Naive Bayes is a linear classifier, while K-NN is not. It performs well in multi-class classification. It is the best model when the features are independent.

The Naive Bayes classifier belongs to the family of probabilistic classifiers and is based on Bayes' theorem.

